#21 Docker system pruning, PHP Cloud Functions, and more

Welcome to the 21st issue of The VOLT newsletter! Here are a few things from the last couple of weeks:

Just a heads-up: I've decided to put together a Discord server! If you're interested in web dev and want to learn, share, and collaborate with me and others, I'd love it if you joined.

Free up missing hard drive space taken up by Docker

If you’re like me and use Docker on a regular basis, you might have gigabytes of disk space taken up by leftover Docker images, containers, volumes, and build cache. Finding out just how much, and clearing it out, is pretty straightforward and only takes a couple of commands in your terminal.

First, run the following to get an overview of the total storage used by Docker:

docker system df

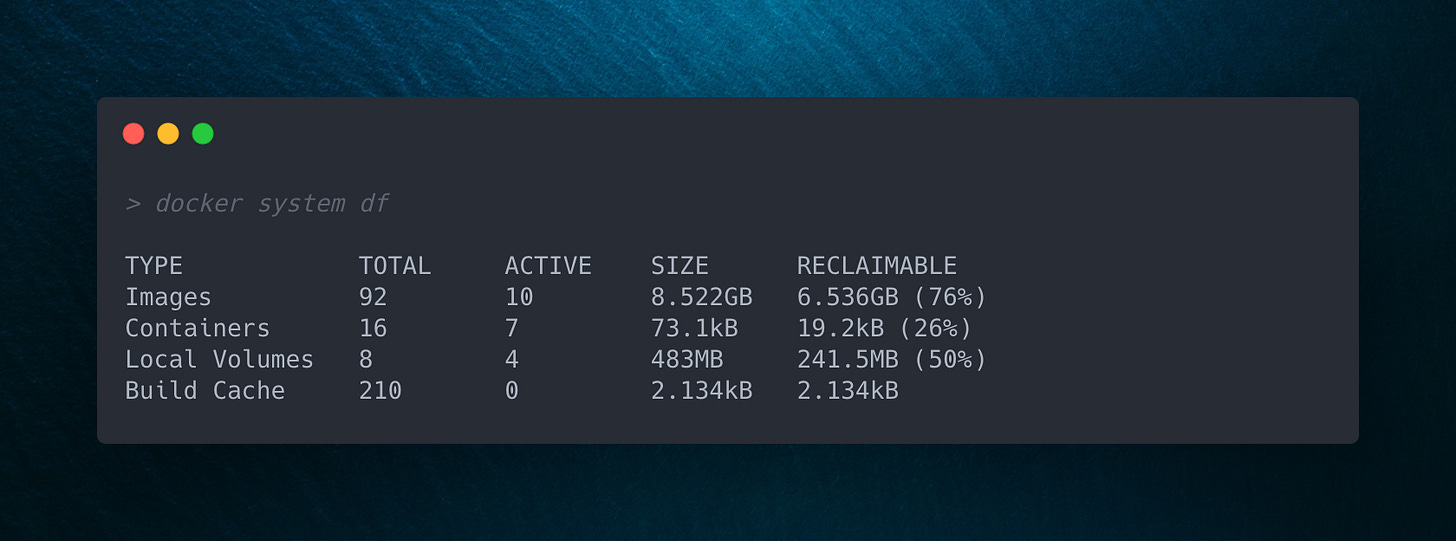

You should see something like the following:

Then run the following command to remove stopped containers, unused networks, dangling images, and unused build cache, plus (because of the --volumes flag) volumes that aren't used by any container:

docker system prune --volumes

Docker will ask you to confirm before deleting anything (type y). After anywhere from a few seconds to a few minutes, you should see a list of deleted volumes, images, and build cache objects, followed by your total reclaimed space. The first time I ran this, that was just over 10GB!

I recommend doing this once a week or so to keep Docker’s disk usage low.
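If you'd rather not rely on remembering, one way to automate the weekly cleanup is a cron job. This is a minimal sketch rather than something from my own setup: it assumes a Linux or macOS host where the docker binary lives at /usr/bin/docker (check with `which docker`) and where the user who owns the crontab is allowed to talk to the Docker daemon. Since nobody is around to answer the confirmation prompt, it adds --force, which skips it:

```shell
# crontab entry (edit with `crontab -e`)
# minute hour day-of-month month day-of-week  command
# Runs every Sunday at 03:00. Assumes docker is at /usr/bin/docker.
# --force skips the y/N confirmation prompt that cron can't answer.
0 3 * * 0  /usr/bin/docker system prune --volumes --force > /dev/null 2>&1
```

Because --volumes is included, volumes that aren't used by any container can be deleted too, so make sure nothing you care about lives only in a volume whose container you've already removed.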

PHP has landed in Google Cloud Functions

Cloud Functions are basically Google’s version of AWS Lambda, allowing you to execute individual scripts in a serverless manner, without having to provision a container or hosting environment for them.

Prior to this addition, you were limited to using a small subset of languages:

- Node
- Java
- .NET
- Ruby
- Python
- Go

But that’s changed now that PHP is officially supported! This is pretty big news for the serverless PHP community, since AWS Lambda doesn’t (currently) support PHP natively. Instead, you have to build your own custom runtime or use a container image.

With this addition, you can now write Cloud Functions in plain PHP! Want to get started? Head over to the Cloud Functions documentation to create your first serverless function.

Reference git commits by message instead of hash

Last week I needed to find a specific commit I had made in a repo a while back (and this repository is updated pretty frequently). I remembered a phrase I had used in the commit message, but other than that, my only real option was scrolling through pages of git log output.

Luckily, there’s an easier way! The following command searches your repo’s entire history, across all branches, and returns only the commits whose messages contain test string (or whatever you’re looking for). Just run:

git log --all --grep='test string'

You’ll get a filtered git log containing just the commits whose commit message includes test string. Thanks to --all, that includes commits on every branch, not just the one you have checked out.
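To see what --all and case sensitivity change, here's a small self-contained sketch that builds a throwaway repository and searches it. The commit messages, the feature branch, and the demo identity are all hypothetical. The -i flag (--regexp-ignore-case) makes --grep case-insensitive, and --oneline only keeps the output compact.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Throwaway repo with made-up commits (all names here are hypothetical).
repo=$(mktemp -d)
trap 'rm -rf "$repo"' EXIT
cd "$repo"
git init -q
git config user.name "Demo User"
git config user.email "demo@example.com"
git config commit.gpgsign false

git commit -q --allow-empty -m "Initial commit"
git commit -q --allow-empty -m "Add test string handling to parser"

# This commit only exists on another branch, so a plain `git log` from the
# original branch won't show it; --all searches every branch.
git checkout -q -b feature
git commit -q --allow-empty -m "Fix Test String edge case"
git checkout -q -

# Default (case-sensitive) search: matches only the parser commit.
git log --all --oneline --grep='test string'
exact_count=$(git log --all --oneline --grep='test string' | wc -l | tr -d ' ')

# Case-insensitive search with -i: matches both commits.
git log --all --oneline -i --grep='test string'
any_case_count=$(git log --all --oneline -i --grep='test string' | wc -l | tr -d ' ')

echo "case-sensitive: $exact_count, case-insensitive: $any_case_count"
```

If the commit you're after might be spelled with different capitalization, adding -i is usually the safer default.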

Save all Laravel Eloquent relationship attributes

Let’s say you have an Eloquent model in Laravel called Shop, with a hasMany relationship of Item models. You’d like to update just the first Item model’s price, as well as the Shop’s name. Instead of calling save() on each model separately, you can save everything with a single call to Laravel’s push() method.

With push(), we can update the Shop model’s name, the first Item model’s price, and even the name of that Item’s related Manufacturer model. Afterwards, we call push() on the original Shop model, and the changes to its own attributes, as well as to the attributes of all of its loaded related models, are saved.

Today I learned

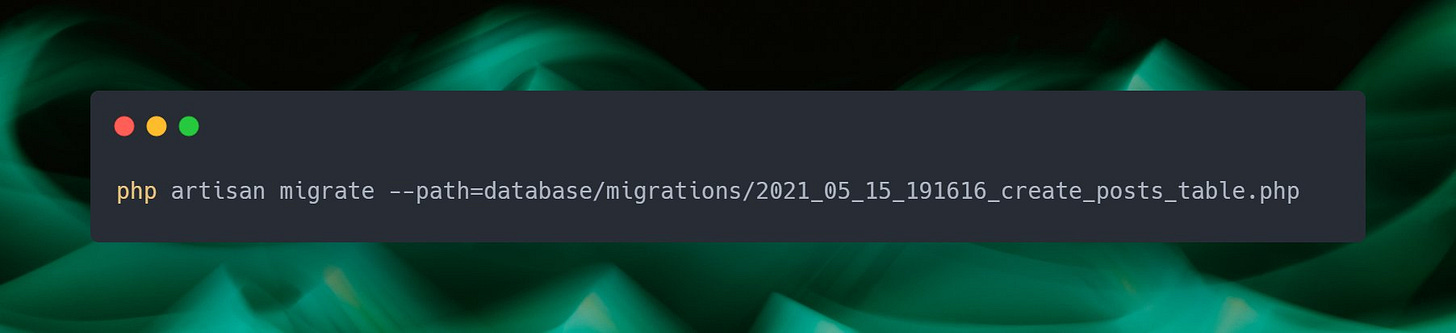

Want to just run just a single migration in your Laravel application? By using the path attribute and passing in the migration file, you can! Using the command below will only run the migration changes available in that one file.
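For reference, the general shape of that command is sketched below. The migration file name is a hypothetical example, so substitute your own; --path is resolved relative to your project's root directory, and a migration that has already been recorded as run won't be run again.

```shell
# Run only the migration(s) in one file (hypothetical file name).
php artisan migrate --path=database/migrations/2021_05_01_000000_create_items_table.php

# Optional: print the SQL the migration would run, without executing it.
php artisan migrate --path=database/migrations/2021_05_01_000000_create_items_table.php --pretend
```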

That’s it for now! If you have any questions about the above, or have something you’d like me to check out, please feel free to let me know on Twitter.
